I pledge allegiance to Anthropic no more

It's time everyone stopped exclusively using Anthropic models

There's no doubt about it; when it comes to AI coding, Anthropic models have always been ahead.

Ever since I used Claude Sonnet 3.5 in Cursor, I have been amazed by the results. This isn't just an experience that was unique to me; many people on the internet and even work colleagues have had the same.

This fact has been so true that whenever a new coding-focused model is released by a company that isn't Anthropic, everyone asks the question: Is it as good as Claude? Typically, the answer is no. Both Sonnet 3.7 and Sonnet 4 have maintained Anthropic's stronghold; however, the recent release of models like Kimi K2, GLM 4.5, GPT-5, and the reduced price of these models has started to waver my allegiance to Anthropic models.

Before continuing, I want to get one thing off my chest. I predominantly use Sonnet and rarely use Opus. Sonnet has always been enough for me. That being said, I'm aware that not using any Opus models in a blind spot on my ability to judge all Claude models fully, but hopefully the points I raise in this post will still be valid despite that.

Claude is no longer far ahead#

Despite what you think about benchmark scores for AI models, they're the best way at a glance to judge a model's performance. Without, of course, trying the model yourself. I don't want to go into detail on all the coding benchmarks, but I personally think SWE-bench and LiveCodeBench are very good at telling if a model is good at real-world coding tasks. So if we take a look at scores for SWE-bench, remember that higher is better.

| Model | Score |

|---|---|

| Opus | 67.60 |

| GPT-5 | 65 |

| Sonnet | 64.93 |

| GPT-5 mini | 59.80 |

| o3 | 58.40 |

| Qwen3 | 55.40 |

Note: These scores are from SWE-bench verified using the mini-SWE-agent.

Also, (yes, this is still part of the note), GLM 4.5 claims to have a score of 64.2 using the OpenHands agent, which is better than the mini-SWE agent in my opinion. Kimi-K2 0711 gets a score of 43.80, but the newer one claims to have a score of 69.2.

I believe that number is inflated since it's not from the SWE-Bench leaderboard site, but I'll let you make your own judgement.

Anyway...Back to the SWE-Bench scores from the table above. Although Opus tops the charts, GPT-5 is not far behind, and it's also much cheaper. But we'll get to that later, for now, let's take a look at the LiveCodeBench scores.

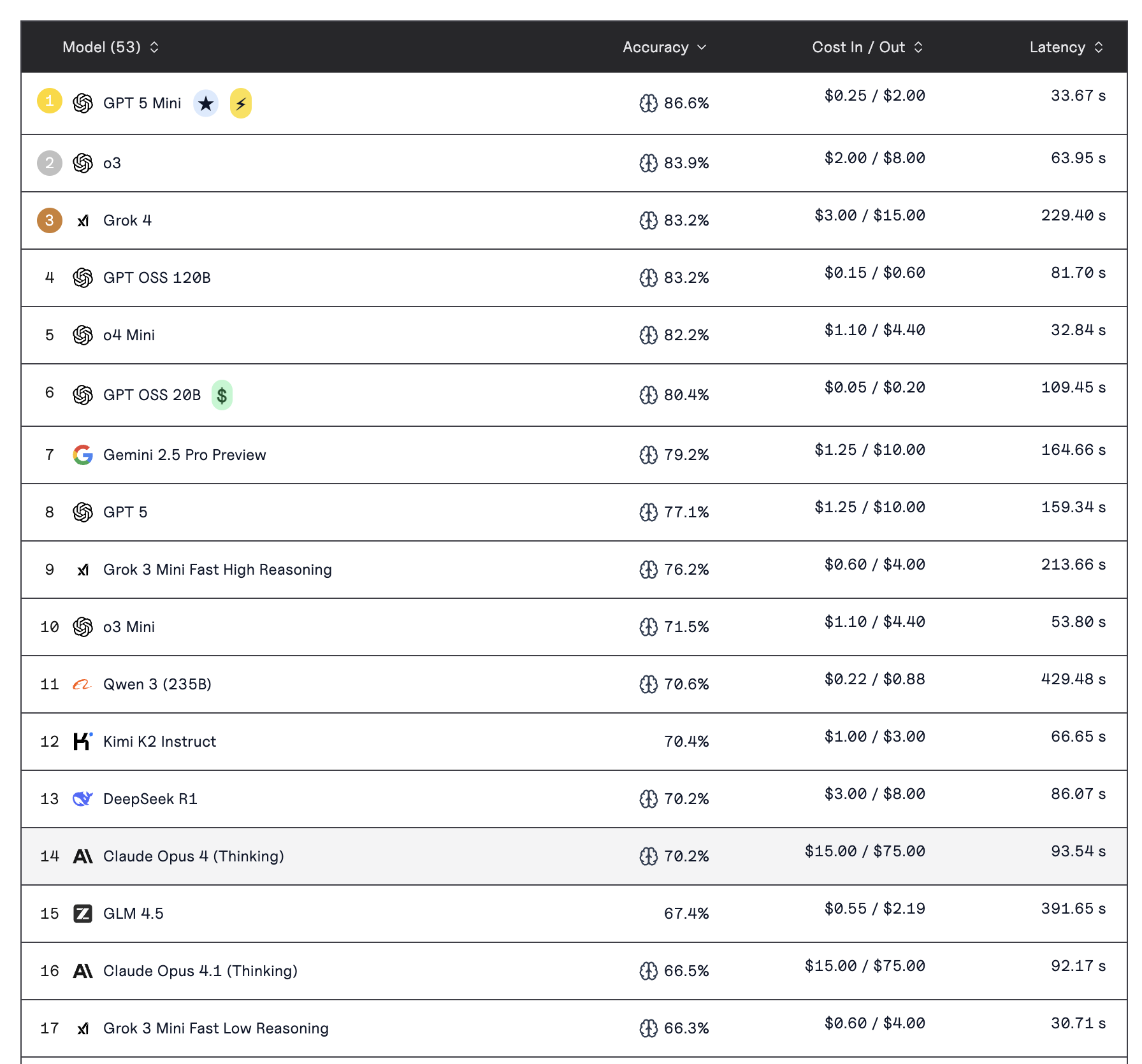

Claude Opus sits at 14, lower than GPT-5 (8), Kimi K2 (12) and GPT-5 Mini (1). I understand benchmarks don't tell the full story of a model's performance, and I'm sure there ways Claude outperform other models, but in my experience using Kimi, GLM and GPT-5, Claude is not that far ahead in terms of programming.

You may disagree, and you're completely entitled to, but you won't be able to disagree on the next point.

Claude is expensive #

In the grand scheme of things, Claude is not expensive. I mean, the fact that you can vibe code a portfolio website cheaper than buying a cup of coffee is amazing. However, when compared to other state-of-the-art models, Claude's pricing is very expensive.

Here is a table I've put together from OpenRouter data so it's per million input and output tokens.

| Model | Input ($) | Output ($) |

|---|---|---|

| Claude Opus 4.1 | 15.00 | 75.00 |

| Claude Sonnet 4 | 3.00 | 15.00 |

| GPT-5 | 1.25 | 10.00 |

| GLM 4.5 | 0.33 | 1.32 |

| Kimi K2 0905 | 0.29 | 1.19 |

Yes, Claude Opus is significantly more expensive than the others, but so is Sonnet. It's more than twice the price of GPT-5 for input tokens. What's amazing is that the open-source Chinese models are super reasonable, considering GLM 4.5 in particular is a fantastic model. I've spent a lot of time with Kimi-K2 0711, which is also brilliant, but not much with 0905.

So we've established that Claude models aren't way ahead of the competition, but are much more expensive. However, I know many of you use the Claude Pro plan, which, last I checked is $20 a month or $17/m if billed annually. This allows a maximum of 40 prompts every 5 hours, which is good. But if you compare that to the GLM coding plan, at $3 a month for 120 prompts every 5 hours, Claude seems very expensive.

Note: The GLM coding plan is $3 a month for the first month then $6 a month thereafter. The yearly plan is the best value at $36 for the first year.

Many believe the subscription model for LLMs can't be sustainable since someone can use 100 dollars of prompts on their 20-dollar a month plan, which may be one of the reasons Anthropic introduced weekly rate limits. But I'm fairly confident OpenAI can maintain that price because of their huge user base that can offset some of the cost, right?

Conclusion#

Don't get me wrong, I think Claude Code is great. I love all the explainer videos Anthropic have on YouTube, and I think the team are very vocal on Twitter/X. I just think Claude is no longer the 'top dog' when it comes to coding. There are other models that are as good as, or even better than it for specific things, i.e. GPT-5 for design.

So what should Anthropic do? I don't know. With their recent funding, they could make their models cheaper or focus on a more efficient model design to make it cheaper. Or they could keep the price as is, but offer more 'premium' quality models with features that a developer would want. Basically, Sonnet 4.5 can't be a slightly better version of 4; it needs to be something groundbreaking, and I'm not sure what that is.

Nevertheless, if you are a die-hard Anthropic fan, I think it's time to try out different models. You'll be surprised at how good they are, and you'll also save a lot of money in the process.

Happy coding 👋